MedOS combines smart glasses, cobots, and AI. Source: Stanford University

Developers are finding ways to combine artificial intelligence, augmented and virtual reality, and robotics for useful applications. The Stanford-Princeton AI Coscientist Team today launched MedOS, which it claimed is “the first AI-XR-cobot system designed to actively assist clinicians inside real clinical environments.”

More than 60% of physicians in the U.S. have reported symptoms of burnout, according to recent studies. The Stanford and Princeton researchers said they designed MedOS to alleviate burnout, not by replacing clinicians, but by reducing cognitive overload, catching errors, and extending precision through intelligent automation and robotic assistance.

“The goal is not to replace doctors. It is to amplify their intelligence, extend their abilities, and reduce the risks posed by fatigue, oversight, or complexity,” stated Dr. Le Cong, co-leader of the interdisciplinary project and an associate professor at Stanford University. “MedOS is not just an assistant. It is the beginning of a new era of AI as a true clinical partner.”

MedOS incorporates feedback loop

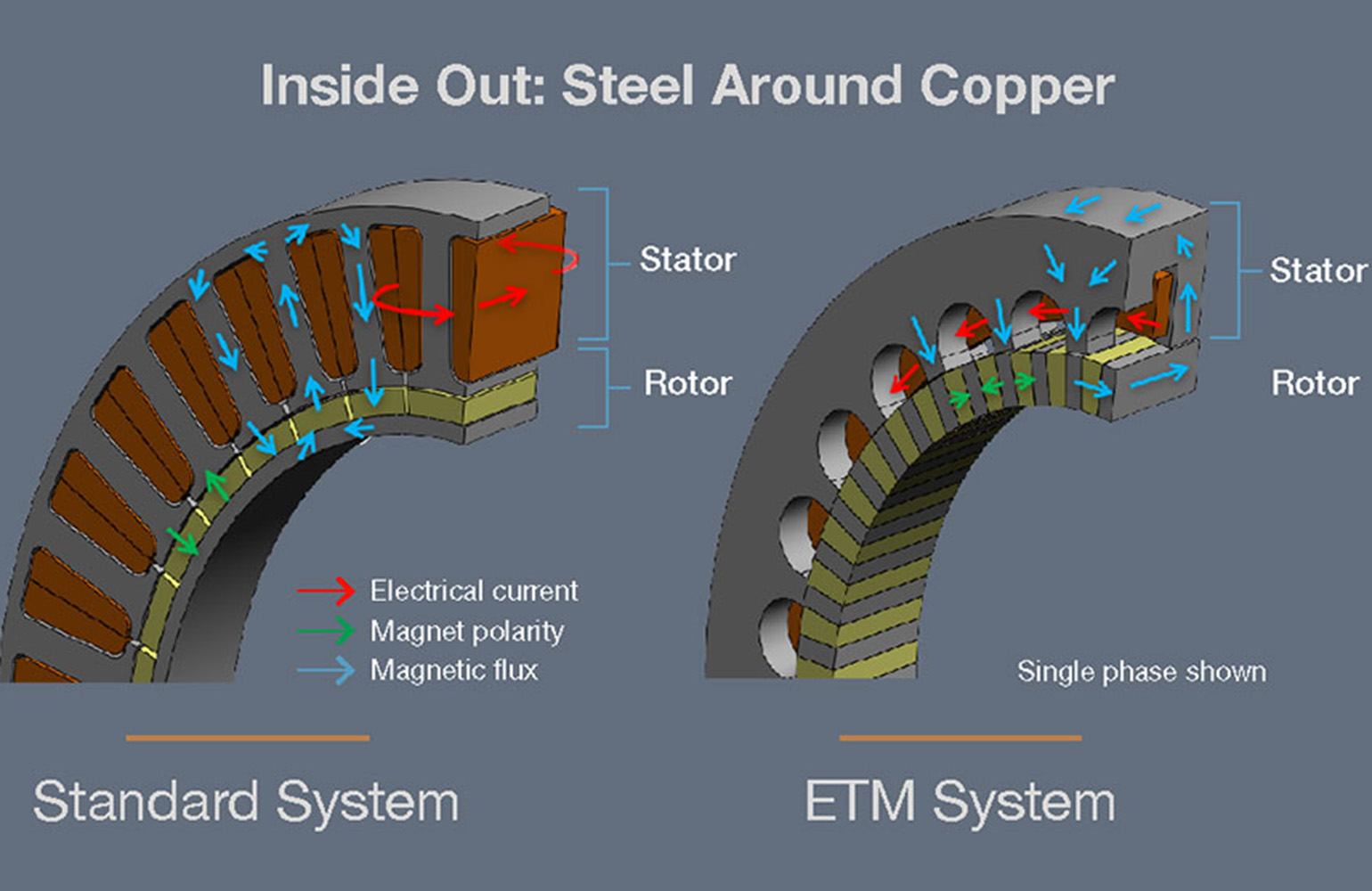

“Medicine historically separates abstract clinical reasoning from physical intervention,” said the researchers in a paper. “We bridge this divide with MedOS, a general-purpose embodied world model. Mimicking human cognition via a dual-system architecture, MedOS demonstrates superior reasoning on biomedical benchmarks and autonomously executes complex clinical research.”

MedOS combines smart glasses, robotic arms, and multi-agent AI to form a real-time co-pilot for doctors and nurses, said the Stanford-Princeton AI Coscientist Team. Its mission is to reduce medical errors, accelerate precision care, and support overburdened clinicians.

The scientists built on years of experience with LabOS to bridge digital diagnostics with physical action in MedOS. They said the system can perceive the world in 3D; reason through medical scenarios; and act in coordination with doctors, nurses, and care teams.

MedOS also introduces a “world model for medicine” that incorporates perception, intervention, and simulation in a continuous feedback loop. Using smart glasses and robotic arms, it can understand complex scenes, plan procedures, and execute them in close collaboration with clinicians.

“The data layer enables us to build the world model with the spatial intelligence to allow robotics to work with humans today rather than wait for fully humanoid robots,” Dr. Cong told The Robot Report. “There are very few robots in hospitals now other than [Intuitive Surgical’s] da Vinci. We want to bring robots into every single part of medicine.”

The platform has been tested in surgical simulations, hospital workflows, and live precision diagnostics. The researchers said it has shown early promise in tasks such as laparoscopic assistance, anatomical mapping, and treatment planning.

Schematic overview of the MedOS architecture bridging the digital world and the physical world. Credit: Mengdi Wang, Le Cong, et al.

Stanford-Princeton AI Coscientist Team builds modular system

The researchers said they designed MedOS to be modular and adaptable across clinical settings and specialties. In surgical simulations, it has demonstrated the ability to interpret real-time video from smart glasses, identify anatomical structures, and assist with robotic tool alignment.

The team has developed its own tactile sensors to work with force- and power-limited robot arms, said Cong. It works with off-the-shelf smart glasses and cameras to collect training data, which complements publicly accessible databases.

MedOS integrates perception, planning, and action, functioning as a clinical co-pilot and an active collaborator in high-stakes procedures, said the Stanford-Princeton AI Coscientist Team. Its capabilities include:

- A multi-agent AI architecture that mirrors clinical reasoning logic, synthesizes evidence, and manages procedures in real time

- MedSuperVision, an open-source medical video dataset featuring more than 85,000 hours of surgical footage across procedures

- Demonstrated success in helping nurses and medical students reach physician-level performance and reducing human error in fatigue-prone environments

- Case studies, including uncovering novel immunotherapy resistance pathways through large-cohort data integration

“We need to first generate the world model,” said Cong. “By giving intelligence from physicians, clinicians, and nurses, our AI brain could be the foundation for deploying fully autonomous humanoids someday.”

In the meantime, the team is working on initial deployments in hospital logistics and laboratories. For logistics, MedOS can help quickly deploy robots to move blood samples and supplies.

Labs are a good place to start because they are not patient-facing, which makes it simpler to ensure that testing and diagnosis are faster and error-free, Cong explained.

Early pilots lead to GTC unveiling

MedOS is launching with support from NVIDIA, AI4Science, Nebius, and VITURE. It has been deployed in early pilots at Stanford, Princeton, and the University of Washington. Clinical collaborators can now request early access.

“We’re just starting to work with clinicians on testing surgical procedures on a mock body,” Cong said. “We want to make sure that for patient-facing applications, we’ve already tested with different levels of physicians and simulations before moving into actual clinical settings. MedOS has already proven to be robust and better than Gemini for spatial tests. It could be very flexible for surgical automation.”

MedOS will be showcased at a Stanford-hosted event in early March, followed by a public unveiling at NVIDIA’s GPU Technology Conference (GTC). Media, clinicians, and research institutions interested in early demonstrations or interviews may contact the MedOS team for coordination.

The Stanford-Princeton AI Coscientist Team is jointly dedicated to building real-time AI systems designed to work alongside human scientists and clinicians. It said LabOS and MedOS are deployed across leading universities and hospitals to accelerate discovery, reduce human error, and improve scientific and clinical outcomes.

“We’re in touch with hospital systems in the Northwest and soon the East Coast,” said Cong. “We’re sending data-collection tools to other partner institutions and for the next version, which will use massive amounts of benchmark data. It’s an ongoing progress, and multiple institutions are interested in MedOS.”

The post Stanford, Princeton scientists launch MedOS AI-XR-cobot clinical system appeared first on The Robot Report.